Don’t ask your network administrator: “Could you handle all my VLANs and security, starting from the applications?” He will never say yes! But there are a few easy ways to gain flexibility when deploying and operating network connections between VMs and the external world:

- Use business process software with a REST API: depending on your environment, this process could take hours or days to deploy, and many prayers to make your environment “secure”

- Use the CLI and gain access to every datacenter switch

- Use NSX

The last option is what every virtualization administrator is looking for, but most network administrators do not feel safe approving it! And this is why I’m writing this post.

It all starts with the application… but soon ends with the network administrator

One of the most important elements every system administrator must consider during infrastructure design is the application perspective: the only point of contact with the development staff! Depending on the experience of your developers, you should be able to obtain some important application characteristics, such as:

- capacities

- functional diagrams

- performance metrics

But there is a topic that is always missing from these interviews, and it often causes security problems:

- network connections (LAN)

- ports and sessions

- service availability

They always say: networks and security are up to you and the network team! Before NSX this was the most exasperating part of project interviews, and you, the poor infrastructure administrator, acted as “the man in the middle”, trying to fit what was missing into the environment you were working on!

From a security perspective, what the applications ideally need is to:

- segment every connection

- put all traffic under the magnifying glass

- change the network topology and provide isolation equivalent to an air gap

- avoid changing the network topology in test and DR environments

From a systems perspective, working in the datacenter means to:

- simplify wherever possible

- introduce security on every network segment

- centralize the management

These challenges, along with a few worries, must be addressed with technology, and this is where the software-defined paradigm helps to build a flexible and secure virtual datacenter.

Hardware (not) commodity?

A traditional datacenter network deployment is composed of:

- firewall/router

- switches (2n per rack)

- servers and storage

The firewall/router and the L3 switch at the top of this scheme represent the single point of failure of the entire network (for some managers, it is also a way to spend a lot of budget!). Talking about connection flows, cps (connections per second) and throughput are the two critical factors to consider to avoid network congestion. For every connection starting from one VM and ending at another VM, almost all physical network devices are involved in forwarding and controlling every packet, even if the traffic never leaves the vDC. That is a “waste” of resources!

Let NSX exceed the limits with VXLAN

Much of the NSX literature includes a diagram that shows every component, from the management plane to the data plane. Let’s take a look here: https://communities.vmware.com/docs/DOC-30645. Three key elements are involved in data flow optimization:

- VXLAN

- DLR: Distributed Logical Router

- DFW: Distributed Firewall

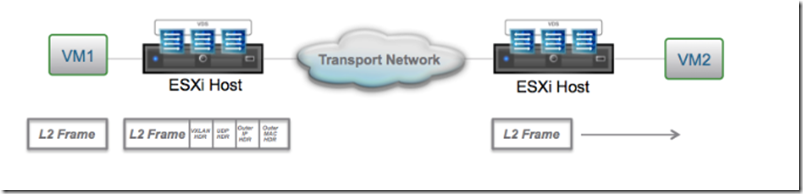

VXLAN can be defined as an L2-over-L3 connection, built to virtualize network traffic on top of a physical or logical connection (https://tools.ietf.org/html/rfc7348). During NSX host preparation, a VIB enables the virtual switches, through a VMkernel interface, to use this “service” and to deploy logical connections with IDs starting from 5000 (a convention used to keep VXLAN IDs clearly apart from VLAN IDs, whose 12-bit space ends at 4095). The prerequisites are:

- all hosts in the cluster must be connected to a common distributed switch

- the MTU of physical and virtual network elements must be set greater than 1550 bytes (1600 is the default)

- the NIC teaming policy must be the same for every portgroup in the distributed switch
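To make the segment ID range and the MTU prerequisite concrete, here is a minimal Python sketch based on the VXLAN header layout defined in RFC 7348 (8-bit flags, 24-bit VNI, reserved bits set to zero). It assumes an IPv4 transport network with no outer 802.1Q tag; the function names are hypothetical and not part of any NSX API.

```python
import struct

VXLAN_FLAG_I = 0x08        # "I" flag: the VNI field is valid (RFC 7348)
VNI_MAX = (1 << 24) - 1    # the VNI is a 24-bit field
VLAN_ID_SPACE = 1 << 12    # an 802.1Q VLAN ID is a 12-bit field


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) reserved(24) VNI(24) reserved(8)."""
    if not 0 <= vni <= VNI_MAX:
        raise ValueError(f"VNI {vni} does not fit in 24 bits")
    # First 32-bit word: flags in the top byte, 24 reserved bits left at zero.
    # Second 32-bit word: VNI in the top 24 bits, 8 reserved bits left at zero.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def required_underlay_mtu(inner_mtu: int = 1500, inner_vlan_tag: bool = False) -> int:
    """IP MTU the transport network must carry for a given VM MTU (IPv4 underlay assumed)."""
    inner_ethernet = 14 + (4 if inner_vlan_tag else 0)  # encapsulated L2 header of the VM frame
    vxlan, udp, outer_ipv4 = 8, 8, 20                   # headers added by the VTEP
    return inner_mtu + inner_ethernet + vxlan + udp + outer_ipv4


print(vxlan_header(5000).hex())    # 0800000000138800
print(required_underlay_mtu())     # 1550
print(VNI_MAX + 1, VLAN_ID_SPACE)  # 16777216 4096
```

The 50 extra bytes come from the inner Ethernet (14), VXLAN (8), UDP (8) and outer IPv4 (20) headers; an IPv6 underlay would add another 20 bytes, which still fits within the 1600-byte default.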

Using a transport zone (a global connection between all hosts involved in one or more VXLAN segments), every host can establish network connections through a VMkernel service called VTEP (VXLAN Tunnel End Point), just as happens in the physical world. In other words, every packet generated by a single VM follows this sequence:

- Through the virtual NIC, the packet arrives at the VXLAN portgroup (created when the VXLAN segment, aka logical switch, is deployed), which is handled by the VTEP

- The VTEP of the host handles the MAC address table and MAC address resolution

- If the destination VM is on the same host, the “link” is established with no traffic leaving the host; otherwise, through the transport zone, the packet is encapsulated and transmitted to the ESXi host running the destination VM

- The VTEP of the other host decapsulates the packet and delivers it to the destination VM.
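The steps above can be modeled with a toy Python sketch of the decision each host takes. The `Vtep` class, host names and MAC addresses are hypothetical illustrations, not NSX code: the sketch skips the actual encapsulation and how remote MAC entries are learned.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class Vtep:
    """Hypothetical, simplified VTEP for a single VXLAN segment (one VNI)."""
    host: str
    vni: int
    local_macs: Set[str] = field(default_factory=set)          # VMs running on this host
    remote_macs: Dict[str, str] = field(default_factory=dict)  # VM MAC -> host whose VTEP owns it

    def forward(self, dst_mac: str) -> Tuple[str, str]:
        """Decide what happens to a frame sent by a local VM to dst_mac."""
        if dst_mac in self.local_macs:
            # Same host: switched locally, no traffic leaves the host.
            return ("deliver_local", dst_mac)
        if dst_mac in self.remote_macs:
            # Other host: encapsulate with this VNI and send it through the transport zone.
            return ("encapsulate", self.remote_macs[dst_mac])
        # Unknown destination: MAC address resolution is needed first.
        return ("resolve", dst_mac)

    def receive(self, vni: int, dst_mac: str) -> Tuple[str, str]:
        """Decapsulate a frame from a remote VTEP and deliver it to the local VM."""
        if vni == self.vni and dst_mac in self.local_macs:
            return ("deliver_local", dst_mac)
        return ("drop", dst_mac)


VM1, VM2 = "00:50:56:aa:00:01", "00:50:56:aa:00:02"
host_a = Vtep("esxi-01", 5001, local_macs={VM1}, remote_macs={VM2: "esxi-02"})
host_b = Vtep("esxi-02", 5001, local_macs={VM2})
print(host_a.forward(VM2))        # ('encapsulate', 'esxi-02')
print(host_b.receive(5001, VM2))  # ('deliver_local', '00:50:56:aa:00:02')
```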

Source: https://communities.vmware.com/docs/DOC-27683

At first glance this may look like a complication of the “standard” way of networking in a virtual environment… but let’s look at the benefits:

- No physical intervention: all traffic flows through a single VLAN!

- Bypass the limit of 4095 VLANs: the VXLAN ID (VNI) is a 24-bit field, so about 16 million segments are available

- Take control of the entire network through a single interface, because every logical switch offers more than a simple connection mechanism… let’s see how in the next paragraph!

Let NSX route your packets with DLR

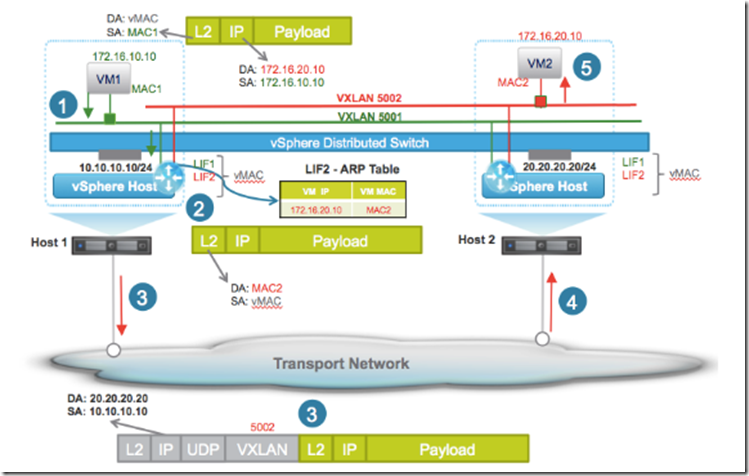

Suppose you need to handle connections between two VMs connected to two separate VXLAN segments:

Source: https://communities.vmware.com/docs/DOC-27683

Alongside the ARP table, a vSwitch VIB called DLR handles (and shares) a routing table. The new sequence from VM1 to VM2 is:

- The packet flows through the VXLAN portgroup (aka logical switch portgroup) and arrives at the default gateway located on the local DLR

- A routing lookup is performed on the local Distributed Logical Router, which identifies the destination logical interface (LIF) connected to the destination VXLAN segment; then an ARP table lookup determines the destination VM’s MAC address (an ARP request is sent if the ARP table does not contain an entry for that IP address)

- If the destination VM is on another host, the packet is encapsulated and sent to that host; otherwise the packet is delivered to the destination VM without leaving the host

- The destination VTEP decapsulates the packet and sends it to the destination VM
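The routing step can be sketched in Python as a longest-prefix-match lookup followed by an ARP lookup, which is how IP routing tables generally work. The routing table, LIF names, next hop and addresses below are hypothetical; the point is that this lookup runs on the local DLR instance of the source host, so the packet never has to reach a physical router.

```python
import ipaddress
from typing import Optional, Tuple

# Hypothetical DLR routing table (the same on every host):
# prefix -> (logical interface, next hop); next hop None = directly connected segment.
ROUTES = {
    ipaddress.ip_network("10.0.1.0/24"): ("lif-vxlan-5001", None),
    ipaddress.ip_network("10.0.2.0/24"): ("lif-vxlan-5002", None),
    ipaddress.ip_network("0.0.0.0/0"): ("lif-uplink", "192.168.100.1"),
}
# Hypothetical ARP table: IP address -> MAC address.
ARP_TABLE = {"10.0.2.20": "00:50:56:aa:00:02"}


def dlr_lookup(dst_ip: str) -> Tuple[str, str, Optional[str]]:
    """Return (LIF, IP to resolve, MAC); MAC is None when an ARP request is needed."""
    addr = ipaddress.ip_address(dst_ip)
    # Longest-prefix match: the most specific route containing the address wins.
    best = max((net for net in ROUTES if addr in net), key=lambda net: net.prefixlen)
    lif, next_hop = ROUTES[best]
    # On a connected segment the destination itself is resolved, otherwise the next hop.
    arp_target = next_hop if next_hop is not None else dst_ip
    return lif, arp_target, ARP_TABLE.get(arp_target)


print(dlr_lookup("10.0.2.20"))  # ('lif-vxlan-5002', '10.0.2.20', '00:50:56:aa:00:02')
print(dlr_lookup("10.0.2.30"))  # ('lif-vxlan-5002', '10.0.2.30', None)
```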

Do you remember the same situation in the physical world? One or more routers, each with a limited number of connections per second, must be deployed… and a large amount of traffic flows across every switch placed between the hosts and the router. Here, no traffic leaves the transport network!

Let NSX secure your traffic with DFW

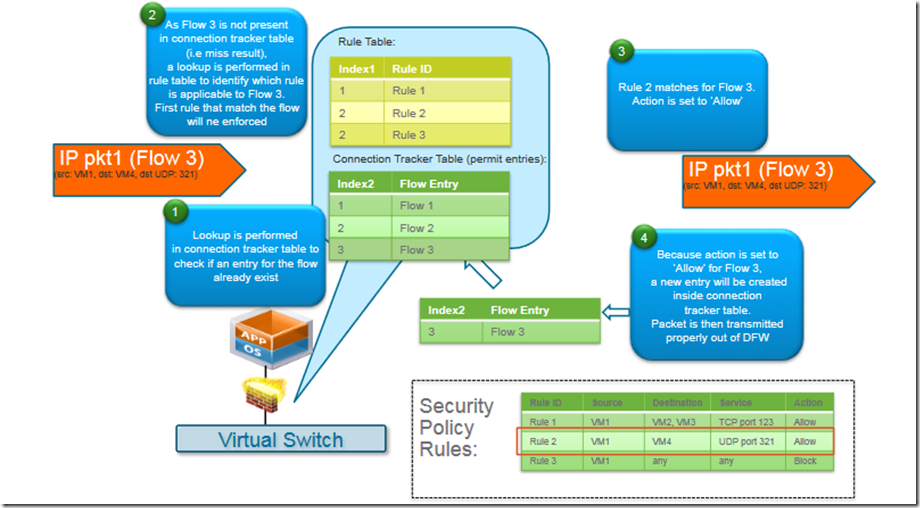

Last but not least is the firewall check on every segment to protect north-south and east-west traffic. With a mechanism similar to the DLR, every packet is checked by the Distributed Firewall (stateful, L2-L4), which uses a Rule Table and a Connection Tracker Table to allow or deny connections before the packet leaves the logical switch.

For every packet sent and/or received by a VM:

- a connection tracker lookup checks whether an entry for the flow already exists

- if no entry is found, a rule table lookup determines which rule applies

- if the action is Allow, the packet flows out of the DFW
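The DFW lookup order (connection tracker first, then rule table) can be sketched in Python as follows. This is a simplified, hypothetical model: rules match exact IPs or "any", flows are keyed on a 5-tuple with no timeouts or return-traffic handling, and both recording allowed flows in the tracker and the `default_action` parameter are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set, Tuple

# Simplified flow key: (src_ip, src_port, dst_ip, dst_port, protocol).
Flow = Tuple[str, int, str, int, str]


@dataclass(frozen=True)
class Rule:
    name: str
    src: str                 # "any" or an exact IP address (no prefixes, for brevity)
    dst: str
    protocol: str
    dst_port: Optional[int]  # None matches any destination port
    action: str              # "allow" or "block"

    def matches(self, flow: Flow) -> bool:
        src, _sport, dst, dport, proto = flow
        return (self.src in ("any", src) and self.dst in ("any", dst)
                and self.protocol == proto and self.dst_port in (None, dport))


class DistributedFirewall:
    """Hypothetical per-vNIC firewall: connection tracker first, then rule table."""

    def __init__(self, rules: Iterable[Rule], default_action: str = "block"):
        self.rules = list(rules)              # evaluated top-down, first match wins
        self.default_action = default_action  # assumption: configurable default rule
        self.conn_tracker: Set[Flow] = set()

    def check(self, flow: Flow) -> Tuple[str, str]:
        """Return (verdict, what decided it)."""
        # 1. Connection tracker lookup: known flows skip rule evaluation.
        if flow in self.conn_tracker:
            return ("allow", "conn-tracker")
        # 2. Rule table lookup: the first matching rule decides.
        for rule in self.rules:
            if rule.matches(flow):
                if rule.action == "allow":
                    # 3. Allowed: track the flow so later packets hit step 1.
                    self.conn_tracker.add(flow)
                return (rule.action, rule.name)
        return (self.default_action, "default")


dfw = DistributedFirewall([
    Rule("web-to-db", "10.0.1.10", "10.0.2.20", "tcp", 3306, "allow"),
    Rule("block-other-tcp", "any", "any", "tcp", None, "block"),
])
flow = ("10.0.1.10", 49152, "10.0.2.20", 3306, "tcp")
print(dfw.check(flow))  # ('allow', 'web-to-db')
print(dfw.check(flow))  # ('allow', 'conn-tracker')
```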

Source: https://communities.vmware.com/docs/DOC-27683

Note that no extra traffic is generated in any direction to analyze and permit or deny the traffic. In a traditional physical deployment this is not possible, because the firewall sits behind the switches. As a result, every flow generates a lot of connections, and the core firewall must be well sized to guarantee security and availability.

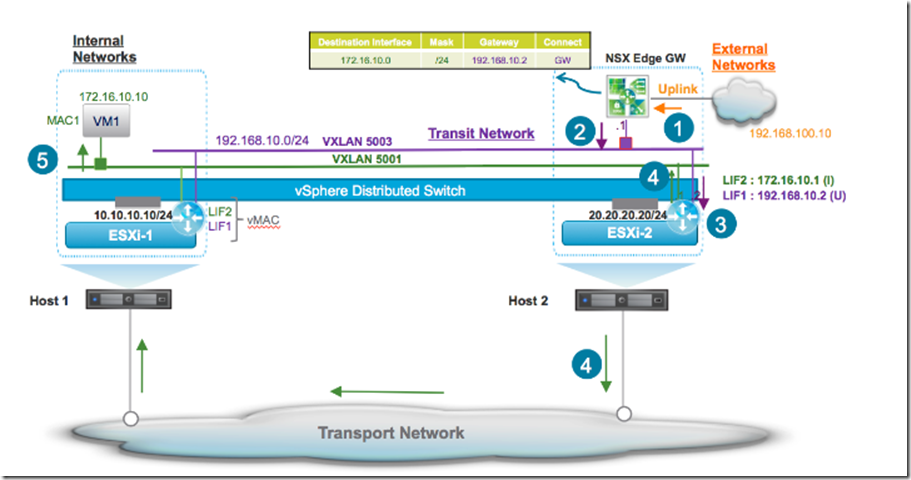

Physical World connections at the “Edge” and more

VXLAN, DLR and DFW handle east-west traffic to build a resilient and efficient networking cluster. Using NSX Edge, it is possible to handle traffic and services outside the vDatacenter: the north-south connection. Let’s see an example:

![image](http://blog.linoproject.net/wp-content/uploads/2017/04/image-17.png)

Source: https://communities.vmware.com/docs/DOC-27683

The combination of DLR and VXLAN handles east-west traffic, while the Edge routes packets to a portgroup that is physically connected to a switch. Out of the box, the Edge is a virtual router/firewall (a VM) that provides many services: firewall rules and routing tables, but also NAT, load balancing and VPN. Being in a virtual environment brings the advantage of interconnecting VMs with a “software link” that can act as a bridge to the physical world.

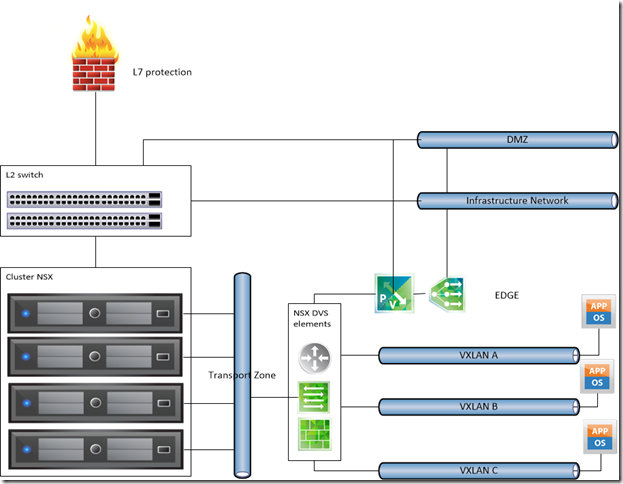

A new network design

Well! The question is: with these elements, is a physical firewall still mandatory? I would say NO only if the north traffic is clean… in other words, only if you are not directly connected to the Internet. Given the latest security attacks, I suggest L7 protection using:

- A 3rd-party plugin such as F5, Check Point or Trend Micro

- A physical IDS/IPS

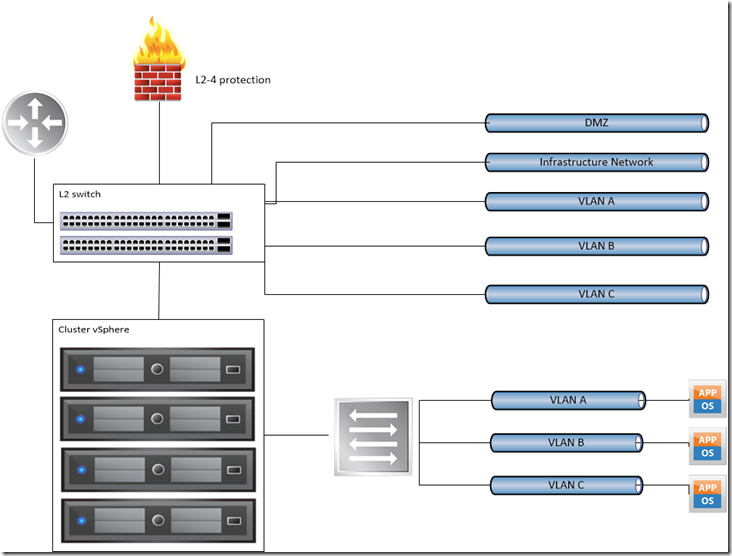

Thinking all-virtual, this could be a great solution:

Physically, it is really simple: top-of-rack switches (at least 2, stacked) and a cluster of physical servers with only 3 VLANs running across all hosts on a single dVS. Just a few notes:

- consider using 10GbE switches with 10GbE SFP+ NICs;

- to use vSAN, plan for adequate throughput (ideally a separate network infrastructure for this purpose);

- if you’re expanding this cluster across multiple racks, consider a “leaf-spine” topology for the production network.

And now you’re the King!

Notes and Sources

There is a lot of documentation and many integrations, with some success stories. For study, I really recommend the design guide available here: https://communities.vmware.com/docs/DOC-27683 (most of the images are taken from this document).

For newcomers there is a classic “For Dummies” guide that I found really well written for anyone approaching network virtualization with an eye on practical uses… Here is the link to register and download a free copy

Alongside the literature, I suggest practicing with the Hands-on Labs to preview all these features without wasting time setting up a test environment: http://www.vmware.com/go/try-nsx-en